Felix Ferdinand Goldau; Max Pascher; Annalies Baumeister; Patrizia Tolle; Jens Gerken; Udo Frese

Adaptive Control in Assistive Application – A Study Evaluating Shared Control by Users with Limited Upper Limb Mobility Proceedings Article Forthcoming

In: RO-MAN 2024 - IEEE International Conference on Robot and Human Interactive Communication, IEEE, Forthcoming.

@inproceedings{Goldau2024,

title = {Adaptive Control in Assistive Application – A Study Evaluating Shared Control by Users with Limited Upper Limb Mobility},

author = {Felix Ferdinand Goldau and Max Pascher and Annalies Baumeister and Patrizia Tolle and Jens Gerken and Udo Frese},

doi = {10.48550/arXiv.2406.06103},

year = {2024},

date = {2024-08-26},

urldate = {2024-08-26},

booktitle = {RO-MAN 2024 - IEEE International Conference on Robot and Human Interactive Communication},

publisher = {IEEE},

abstract = {Shared control in assistive robotics blends human autonomy with computer assistance, thus simplifying complex tasks for individuals with physical impairments. This study assesses an adaptive Degrees of Freedom control method specifically tailored for individuals with upper limb impairments. It employs a between-subjects analysis with 24 participants, conducting 81 trials across three distinct input devices in a realistic everyday-task setting. Given the diverse capabilities of the vulnerable target demographic and the known challenges in statistical comparisons due to individual differences, the study focuses primarily on subjective qualitative data. The results reveal consistently high success rates in trial completions, irrespective of the input device used. Participants appreciated their involvement in the research process, displayed a positive outlook, and quick adaptability to the control system. Notably, each participant effectively managed the given task within a short time frame.},

keywords = {},

pubstate = {forthcoming},

tppubtype = {inproceedings}

}

Max Pascher; Felix Ferdinand Goldau; Kirill Kronhardt; Udo Frese; Jens Gerken

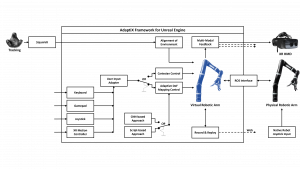

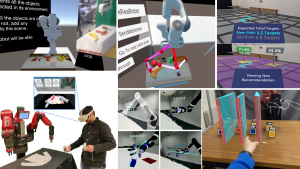

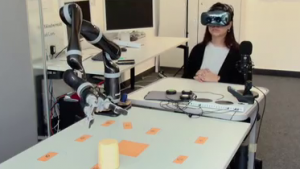

AdaptiX – A Transitional XR Framework for Development and Evaluation of Shared Control Applications in Assistive Robotics Best Paper Journal Article

In: Proc. ACM Hum.-Comput. Interact., vol. 8, no. EICS, 2024, ISSN: 2573-0142.

@article{Pascher.2024,

title = {AdaptiX – A Transitional XR Framework for Development and Evaluation of Shared Control Applications in Assistive Robotics},

author = {Max Pascher and Felix Ferdinand Goldau and Kirill Kronhardt and Udo Frese and Jens Gerken},

url = {https://github.com/maxpascher/AdaptiX, AdaptiX GitHub repository},

doi = {10.1145/3660243},

issn = {2573-0142},

year = {2024},

date = {2024-06-17},

urldate = {2024-06-17},

journal = {Proc. ACM Hum.-Comput. Interact.},

volume = {8},

number = {EICS},

publisher = {Association for Computing Machinery},

address = {New York, NY, USA},

abstract = {With the ongoing efforts to empower people with mobility impairments and the increase in technological acceptance by the general public, assistive technologies, such as collaborative robotic arms, are gaining popularity. Yet, their widespread success is limited by usability issues, specifically the disparity between user input and software control along the autonomy continuum. To address this, shared control concepts provide opportunities to combine the targeted increase of user autonomy with a certain level of computer assistance. This paper presents the free and open-source AdaptiX XR framework for developing and evaluating shared control applications in a high-resolution simulation environment. The initial framework consists of a simulated robotic arm with an example scenario in Virtual Reality (VR), multiple standard control interfaces, and a specialized recording/replay system. AdaptiX can easily be extended for specific research needs, allowing Human-Robot Interaction (HRI) researchers to rapidly design and test novel interaction methods, intervention strategies, and multi-modal feedback techniques, without requiring an actual physical robotic arm during the early phases of ideation, prototyping, and evaluation. Also, a Robot Operating System (ROS) integration enables the controlling of a real robotic arm in a PhysicalTwin approach without any simulation-reality gap. Here, we review the capabilities and limitations of AdaptiX in detail and present three bodies of research based on the framework. AdaptiX can be accessed at https://adaptix.robot-research.de.},

keywords = {},

pubstate = {published},

tppubtype = {article}

}

Younes Lakhnati; Max Pascher; Jens Gerken

Exploring a GPT-based Large Language Model for Variable Autonomy in a VR-based Human-Robot Teaming Simulation Journal Article

In: Frontiers in Robotics and AI, vol. 11, 2024.

@article{Lakhnati2024,

title = {Exploring a GPT-based Large Language Model for Variable Autonomy in a VR-based Human-Robot Teaming Simulation},

author = {Younes Lakhnati and Max Pascher and Jens Gerken},

editor = {Andreas Theodorou},

doi = {10.3389/frobt.2024.1347538},

year = {2024},

date = {2024-04-03},

urldate = {2024-04-02},

journal = {Frontiers in Robotics and AI},

volume = {11},

abstract = {In a rapidly evolving digital landscape, autonomous tools and robots are becoming commonplace. Recognizing the significance of this development, this paper explores the integration of Large Language Models (LLMs) like Generative pre-trained transformer (GPT) into human-robot teaming environments to facilitate variable autonomy through the means of verbal human-robot communication. In this paper, we introduce a novel simulation framework for such a GPT-powered multi-robot testbed environment, based on a Unity Virtual Reality (VR) setting. This system allows users to interact with simulated robot agents through natural language, each powered by individual GPT cores. By means of OpenAI's function calling, we bridge the gap between unstructured natural language input and structured robot actions. A user study with 12 participants explores the effectiveness of GPT-4 and, more importantly, user strategies when being given the opportunity to converse in natural language within a simulated multi-robot environment. Our findings suggest that users may have preconceived expectations on how to converse with robots and seldom try to explore the actual language and cognitive capabilities of their simulated robot collaborators. Still, those users who did explore were able to benefit from a much more natural flow of communication and human-like back-and-forth. We provide a set of lessons learned for future research and technical implementations of similar systems.},

keywords = {},

pubstate = {published},

tppubtype = {article}

}

Amal Nanavati; Max Pascher; Vinitha Ranganeni; Ethan K. Gordon; Taylor Kessler Faulkner; Siddhartha S. Srinivasa; Maya Cakmak; Patrícia Alves-Oliveira; Jens Gerken

Multiple Ways of Working with Users to Develop Physically Assistive Robots Proceedings Article

In: A3DE '24: Workshop on Assistive Applications, Accessibility, and Disability Ethics at the ACM/IEEE International Conference on Human-Robot Interaction, 2024.

@inproceedings{Nanavati2024,

title = {Multiple Ways of Working with Users to Develop Physically Assistive Robots},

author = {Amal Nanavati and Max Pascher and Vinitha Ranganeni and Ethan K. Gordon and Taylor Kessler Faulkner and Siddhartha S. Srinivasa and Maya Cakmak and Patrícia Alves-Oliveira and Jens Gerken},

doi = {10.48550/arXiv.2403.00489},

year = {2024},

date = {2024-03-15},

urldate = {2024-03-15},

booktitle = {A3DE '24: Workshop on Assistive Applications, Accessibility, and Disability Ethics at the ACM/IEEE International Conference on Human-Robot Interaction},

abstract = {Despite the growth of physically assistive robotics (PAR) research over the last decade, nearly half of PAR user studies do not involve participants with the target disabilities. There are several reasons for this---recruitment challenges, small sample sizes, and transportation logistics---all influenced by systemic barriers that people with disabilities face. However, it is well-established that working with end-users results in technology that better addresses their needs and integrates with their lived circumstances. In this paper, we reflect on multiple approaches we have taken to working with people with motor impairments across the design, development, and evaluation of three PAR projects: (a) assistive feeding with a robot arm; (b) assistive teleoperation with a mobile manipulator; and (c) shared control with a robot arm. We discuss these approaches to working with users along three dimensions---individual- vs. community-level insight, logistic burden on end-users vs. researchers, and benefit to researchers vs. community---and share recommendations for how other PAR researchers can incorporate users into their work.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

Maciej K. Wozniak; Max Pascher; Bryce Ikeda; Matthew B. Luebbers; Ayesha Jena

Virtual, Augmented, and Mixed Reality for Human-Robot Interaction (VAM-HRI) Proceedings Article

In: Companion of the 2024 ACM/IEEE International Conference on Human-Robot Interaction (HRI '24 Companion), March 11--14, 2024, Boulder, CO, USA, 2024, ISBN: 979-8-4007-0323-2/24/03.

@inproceedings{Wozniak2024,

title = {Virtual, Augmented, and Mixed Reality for Human-Robot Interaction (VAM-HRI)},

author = {Maciej K. Wozniak and Max Pascher and Bryce Ikeda and Matthew B. Luebbers and Ayesha Jena},

url = {https://vam-hri.github.io/, VAM-HRI workshop website},

doi = {10.1145/3610978.3638158},

isbn = {979-8-4007-0323-2/24/03},

year = {2024},

date = {2024-03-11},

urldate = {2024-03-11},

booktitle = {Companion of the 2024 ACM/IEEE International Conference on Human-Robot Interaction (HRI '24 Companion), March 11--14, 2024, Boulder, CO, USA},

abstract = {The 7th International Workshop on Virtual, Augmented, and Mixed Reality for Human-Robot Interaction (VAM-HRI) seeks to bring together researchers from human-robot interaction (HRI), robotics, and mixed reality (MR) to address the challenges related to mixed reality interactions between humans and robots. Key topics include the development of robots capable of interacting with humans in mixed reality, the use of virtual reality for creating interactive robots, designing augmented reality interfaces for communication between humans and robots, exploring mixed reality interfaces for enhancing robot learning, comparative analysis of the capabilities and perceptions of robots and virtual agents, and sharing best design practices. VAM-HRI 2024 will build on the success of VAM-HRI workshops held from 2018 to 2023, advancing research in this specialized community. The prior year’s website is located at https://vam-hri.github.io.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

Max Pascher; Alia Saad; Jonathan Liebers; Roman Heger; Jens Gerken; Stefan Schneegass; Uwe Gruenefeld

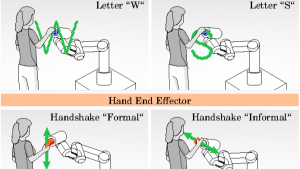

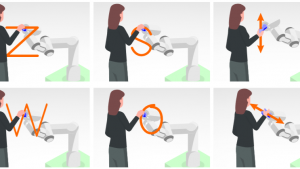

Hands-On Robotics: Enabling Communication Through Direct Gesture Control Proceedings Article

In: Companion of the 2024 ACM/IEEE International Conference on Human-Robot Interaction (HRI '24 Companion), March 11--14, 2024, Boulder, CO, USA, 2024, ISBN: 979-8-4007-0323-2/24/03.

@inproceedings{Pascher2024,

title = {Hands-On Robotics: Enabling Communication Through Direct Gesture Control},

author = {Max Pascher and Alia Saad and Jonathan Liebers and Roman Heger and Jens Gerken and Stefan Schneegass and Uwe Gruenefeld},

doi = {10.1145/3610978.3640635},

isbn = {979-8-4007-0323-2/24/03},

year = {2024},

date = {2024-03-11},

urldate = {2024-03-11},

booktitle = {Companion of the 2024 ACM/IEEE International Conference on Human-Robot Interaction (HRI '24 Companion), March 11--14, 2024, Boulder, CO, USA},

abstract = {Effective Human-Robot Interaction (HRI) is fundamental to seamlessly integrating robotic systems into our daily lives. However, current communication modes require additional technological interfaces, which can be cumbersome and indirect. This paper presents a novel approach, using direct motion-based communication by moving a robot's end effector. Our strategy enables users to communicate with a robot by using four distinct gestures -- two handshakes ('formal' and 'informal') and two letters ('W' and 'S'). As a proof-of-concept, we conducted a user study with 16 participants, capturing subjective experience ratings and objective data for training machine learning classifiers. Our findings show that the four different gestures performed by moving the robot's end effector can be distinguished with close to 100% accuracy. Our research offers implications for the design of future HRI interfaces, suggesting that motion-based interaction can empower human operators to communicate directly with robots, removing the necessity for additional hardware.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

Max Pascher; Kevin Zinta; Jens Gerken

Exploring of Discrete and Continuous Input Control for AI-enhanced Assistive Robotic Arms Proceedings Article

In: Companion of the 2024 ACM/IEEE International Conference on Human-Robot Interaction (HRI '24 Companion), March 11--14, 2024, Boulder, CO, USA, 2024, ISBN: 979-8-4007-0323-2/24/03.

@inproceedings{Pascher2024c,

title = {Exploring of Discrete and Continuous Input Control for AI-enhanced Assistive Robotic Arms},

author = {Max Pascher and Kevin Zinta and Jens Gerken},

doi = {10.1145/3610978.3640626},

isbn = {979-8-4007-0323-2/24/03},

year = {2024},

date = {2024-03-11},

urldate = {2024-03-11},

booktitle = {Companion of the 2024 ACM/IEEE International Conference on Human-Robot Interaction (HRI '24 Companion), March 11--14, 2024, Boulder, CO, USA},

abstract = {Robotic arms, integral in domestic care for individuals with motor impairments, enable them to perform Activities of Daily Living (ADLs) independently, reducing dependence on human caregivers. These collaborative robots require users to manage multiple Degrees-of-Freedom (DoFs) for tasks like grasping and manipulating objects. Conventional input devices, typically limited to two DoFs, necessitate frequent and complex mode switches to control individual DoFs. Modern adaptive controls with feed-forward multi-modal feedback reduce the overall task completion time, number of mode switches, and cognitive load. Despite the variety of input devices available, their effectiveness in adaptive settings with assistive robotics has yet to be thoroughly assessed. This study explores three different input devices by integrating them into an established XR framework for assistive robotics, evaluating them and providing empirical insights through a preliminary study for future developments.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

Max Pascher

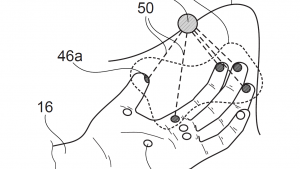

System and Method for Providing an Object-related Haptic Effect Patent

2024.

@patent{Pascher2024b,

title = {System and Method for Providing an Object-related Haptic Effect},

author = {Max Pascher},

url = {https://register.dpma.de/DPMAregister/pat/register?AKZ=1020221221733, DPMAregister

https://register.dpma.de/DPMAregister/pat/PatSchrifteneinsicht?docId=DE102022122173B4, Patentschrift},

year = {2024},

date = {2024-03-07},

urldate = {2024-03-07},

booktitle = {German Patent and Trade Mark Office (DPMA)},

abstract = {The invention relates to a computer-implemented method for providing a haptic effect related to an object (44) in a virtual or mixed environment by means of a haptic device (12), wherein the haptic device (12) comprises a carrier (18) wearable on a body part (16) of a user, and a plurality of actuators (20) distributed on the carrier (18) over a surface (22) of the carrier (18) and communicatively connected to a control module (14) for providing a local haptic stimulus (46), the method comprising the step of generating a control signal for the actuators (20) such that the local haptic stimuli (46) of a subset (48) of the actuators (20) are output with a timing corresponding to their proximity to the object (44). The invention further relates to a control module (14) configured to carry out the above method. The invention further relates to a system (10) for providing a haptic effect related to an object (44) in a virtual or mixed environment, comprising a haptic device (12) and the above control module (14).},

howpublished = {DPMAregister},

keywords = {},

pubstate = {published},

tppubtype = {patent}

}

Alia Saad; Max Pascher; Khaled Kassem; Roman Heger; Jonathan Liebers; Stefan Schneegass; Uwe Gruenefeld

Hand-in-Hand: Investigating Mechanical Tracking for User Identification in Cobot Interaction Proceedings Article

In: Proceedings of International Conference on Mobile and Ubiquitous Multimedia (MUM), Vienna, Austria, 2023.

@inproceedings{Saad2023,

title = {Hand-in-Hand: Investigating Mechanical Tracking for User Identification in Cobot Interaction},

author = {Alia Saad and Max Pascher and Khaled Kassem and Roman Heger and Jonathan Liebers and Stefan Schneegass and Uwe Gruenefeld},

doi = {10.1145/3626705.3627771},

year = {2023},

date = {2023-12-03},

urldate = {2023-12-03},

booktitle = {Proceedings of International Conference on Mobile and Ubiquitous Multimedia (MUM)},

address = {Vienna, Austria},

abstract = {Robots play a vital role in modern automation, with applications in manufacturing and healthcare. Collaborative robots integrate human and robot movements. Therefore, it is essential to ensure that interactions involve qualified, and thus identified, individuals. This study delves into a new approach: identifying individuals through robot arm movements. Different from previous methods, users guide the robot, and the robot senses the movements via joint sensors. We asked 18 participants to perform six gestures, revealing the potential use as unique behavioral traits or biometrics, achieving F1-score up to 0.87, which suggests direct robot interactions as a promising avenue for implicit and explicit user identification.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

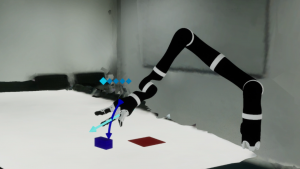

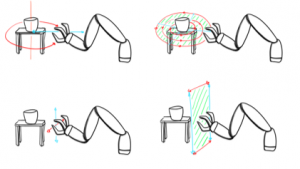

Max Pascher; Kirill Kronhardt; Felix Ferdinand Goldau; Udo Frese; Jens Gerken

In Time and Space: Towards Usable Adaptive Control for Assistive Robotic Arms Proceedings Article

In: RO-MAN 2023 - IEEE International Conference on Robot and Human Interactive Communication, IEEE, 2023.

@inproceedings{Pascher2023c,

title = {In Time and Space: Towards Usable Adaptive Control for Assistive Robotic Arms},

author = {Max Pascher and Kirill Kronhardt and Felix Ferdinand Goldau and Udo Frese and Jens Gerken},

doi = {10.1109/RO-MAN57019.2023.10309381},

year = {2023},

date = {2023-11-13},

urldate = {2023-11-13},

booktitle = {RO-MAN 2023 - IEEE International Conference on Robot and Human Interactive Communication},

publisher = {IEEE},

abstract = {Robotic solutions, in particular robotic arms, are becoming more frequently deployed for close collaboration with humans, for example in manufacturing or domestic care environments. These robotic arms require the user to control several Degrees-of-Freedom (DoFs) to perform tasks, primarily involving grasping and manipulating objects. Standard input devices predominantly have two DoFs, requiring time-consuming and cognitively demanding mode switches to select individual DoFs. Contemporary Adaptive DoF Mapping Controls (ADMCs) have shown to decrease the necessary number of mode switches but were up to now not able to significantly reduce the perceived workload. Users still bear the mental workload of incorporating abstract mode switching into their workflow. We address this by providing feed-forward multimodal feedback using updated recommendations of ADMC, allowing users to visually compare the current and the suggested mapping in real-time. We contrast the effectiveness of two new approaches that a) continuously recommend updated DoF combinations or b) use discrete thresholds between current robot movements and new recommendations. Both are compared in a Virtual Reality (VR) in-person study against a classic control method. Significant results for lowered task completion time, fewer mode switches, and reduced perceived workload conclusively establish that in combination with feedforward, ADMC methods can indeed outperform classic mode switching. A lack of apparent quantitative differences between Continuous and Threshold reveals the importance of user-centered customization options. Including these implications in the development process will improve usability, which is essential for successfully implementing robotic technologies with high user acceptance.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

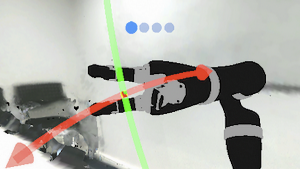

Max Pascher; Uwe Gruenefeld; Stefan Schneegass; Jens Gerken

How to Communicate Robot Motion Intent: A Scoping Review Proceedings Article

In: Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems (CHI ’23), ACM New York, NY, USA, 2023.

@inproceedings{Pascher2023,

title = {How to Communicate Robot Motion Intent: A Scoping Review},

author = {Max Pascher and Uwe Gruenefeld and Stefan Schneegass and Jens Gerken},

url = {https://rmi.robot-research.de/, Interactive Data Visualization of the Paper Corpus},

doi = {10.1145/3544548.3580857},

year = {2023},

date = {2023-04-10},

urldate = {2023-04-10},

booktitle = {Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems (CHI ’23)},

address = {New York, NY, USA},

organization = {ACM},

abstract = {Robots are becoming increasingly omnipresent in our daily lives, supporting us and carrying out autonomous tasks. In Human-Robot Interaction, human actors benefit from understanding the robot's motion intent to avoid task failures and foster collaboration. Finding effective ways to communicate this intent to users has recently received increased research interest. However, no common language has been established to systematize robot motion intent. This work presents a scoping review aimed at unifying existing knowledge. Based on our analysis, we present an intent communication model that depicts the relationship between robot and human through different intent dimensions (intent type, intent information, intent location). We discuss these different intent dimensions and their interrelationships with different kinds of robots and human roles. Throughout our analysis, we classify the existing research literature along our intent communication model, allowing us to identify key patterns and possible directions for future research.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

Max Pascher; Til Franzen; Kirill Kronhardt; Uwe Gruenefeld; Stefan Schneegass; Jens Gerken

HaptiX: Vibrotactile Haptic Feedback for Communication of 3D Directional Cues Proceedings Article

In: Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems - Extended Abstract (CHI ’23), ACM New York, NY, USA, 2023.

@inproceedings{Pascher2023b,

title = {HaptiX: Vibrotactile Haptic Feedback for Communication of 3D Directional Cues},

author = {Max Pascher and Til Franzen and Kirill Kronhardt and Uwe Gruenefeld and Stefan Schneegass and Jens Gerken},

doi = {10.1145/3544549.3585601},

year = {2023},

date = {2023-04-09},

urldate = {2023-04-09},

booktitle = {Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems - Extended Abstract (CHI ’23)},

address = {New York, NY, USA},

organization = {ACM},

abstract = {In Human-Computer-Interaction, vibrotactile haptic feedback offers the advantage of being independent of any visual perception of the environment. Most importantly, the user's field of view is not obscured by user interface elements, and the visual sense is not unnecessarily strained. This is especially advantageous when the visual channel is already busy, or the visual sense is limited. We developed three design variants based on different vibrotactile illusions to communicate 3D directional cues. In particular, we explored two variants based on the vibrotactile illusion of the cutaneous rabbit and one based on apparent vibrotactile motion. To communicate gradient information, we combined these with pulse-based and intensity-based mapping. A subsequent study showed that the pulse-based variants based on the vibrotactile illusion of the cutaneous rabbit are suitable for communicating both directional and gradient characteristics. The results further show that a representation of 3D directions via vibrations can be effective and beneficial.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

Annalies Baumeister; Max Pascher; Yashaswini Shivashankar; Felix Ferdinand Goldau; Udo Frese; Jens Gerken; Elizaveta Gardo; Barbara Klein; Patrizia Tolle

AI for Simplifying the Use of an Assistive Robotic Arm for People with severe Body Impairments Journal Article

In: Gerontechnology, vol. 21, pp. 5, 2022, ISSN: 1569-1101.

@article{Baumeister2022,

title = {AI for Simplifying the Use of an Assistive Robotic Arm for People with severe Body Impairments},

author = {Annalies Baumeister and Max Pascher and Yashaswini Shivashankar and Felix Ferdinand Goldau and Udo Frese and Jens Gerken and Elizaveta Gardo and Barbara Klein and Patrizia Tolle},

doi = {10.4017/gt.2022.21.s.578.5.sp7},

issn = {1569-1101},

year = {2022},

date = {2022-10-24},

urldate = {2022-10-24},

booktitle = {Proceedings of the 13th World Conference of Gerontechnology (ISG 2022)},

journal = {Gerontechnology},

volume = {21},

pages = {5},

abstract = {Assistive robotic arms, e.g., the Kinova JACO, aim to assist people with upper-body disabilities in everyday tasks and thus increase their autonomy (Brose et al. 2010; Beaudoin et al. 2019). A long-term survey with seven JACO users showed that they were satisfied with the technology and that JACO had a positive psychosocial impact. Still, the users had some difficulties performing daily activities with the arm, e.g., it took them some time to finish a task (Beaudoin et al. 2019). Herlant et al. claim that the main problem for a user is that mode switching is time-consuming and tiring (Herlant et al. 2017). To tackle this issue, deep neural network(s) will be developed to facilitate the use of the robotic arm. A sensor-based situation recognition will be combined with an algorithm-based control to form an adaptive AI-based control system. The project focuses on three main aspects: 1) A neural network providing suggestions for movement options based on training data generated in virtual reality. 2) Exploring data glasses as a possibility for displaying feedback in a user-centered design process. 3) Elicitation of requirements, risks and ethical system evaluation using a participatory approach.},

keywords = {},

pubstate = {published},

tppubtype = {article}

}

Max Pascher; Kirill Kronhardt; Til Franzen; Jens Gerken

Adaptive DoF: Concepts to Visualize AI-generated Movements in Human-Robot Collaboration Proceedings Article

In: Proceedings of the 2022 International Conference on Advanced Visual Interfaces (AVI 2022), ACM, New York, NY, USA, 2022, ISBN: 978-1-4503-9719-3/22/06.

@inproceedings{Pascher2022b,

title = {Adaptive DoF: Concepts to Visualize AI-generated Movements in Human-Robot Collaboration},

author = {Max Pascher and Kirill Kronhardt and Til Franzen and Jens Gerken},

doi = {10.1145/3531073.3534479},

isbn = {978-1-4503-9719-3/22/06},

year = {2022},

date = {2022-06-06},

urldate = {2022-06-06},

booktitle = {Proceedings of the 2022 International Conference on Advanced Visual Interfaces (AVI 2022)},

publisher = {ACM},

address = {New York, NY, USA},

abstract = {Nowadays, robots collaborate closely with humans in a growing number of areas. Enabled by lightweight materials and safety sensors, these cobots are gaining increasing popularity in domestic care, supporting people with physical impairments in their everyday lives. However, when cobots perform actions autonomously, it remains challenging for human collaborators to understand and predict their behavior. This, however, is crucial for achieving trust and user acceptance. One significant aspect of predicting cobot behavior is understanding their motion intent and comprehending how they "think" about their actions. We work on solutions that communicate the cobot's AI-generated motion intent to a human collaborator. Effective communication enables users to proceed with the most suitable option. We present a design exploration with different visualization techniques to optimize this user understanding, ideally resulting in increased safety and end-user acceptance.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

Kirill Kronhardt; Stephan Rübner; Max Pascher; Felix Goldau; Udo Frese; Jens Gerken

Adapt or Perish? Exploring the Effectiveness of Adaptive DoF Control Interaction Methods for Assistive Robot Arms Journal Article

In: Technologies, vol. 10, iss. 1, no. 30, 2022, ISSN: 2227-7080.

@article{Kronhardt2022,

title = {Adapt or Perish? Exploring the Effectiveness of Adaptive DoF Control Interaction Methods for Assistive Robot Arms},

author = {Kirill Kronhardt and Stephan Rübner and Max Pascher and Felix Goldau and Udo Frese and Jens Gerken},

url = {https://youtu.be/AEK4AOQKz1k},

doi = {10.3390/technologies10010030},

issn = {2227-7080},

year = {2022},

date = {2022-02-14},

urldate = {2022-02-14},

journal = {Technologies},

volume = {10},

number = {30},

issue = {1},

abstract = {Robot arms are one of many assistive technologies used by people with motor impairments. Assistive robot arms can allow people to perform activities of daily living (ADL) involving grasping and manipulating objects in their environment without the assistance of caregivers. Suitable input devices (e.g., joysticks) mostly have two Degrees of Freedom (DoF), while most assistive robot arms have six or more. This results in time-consuming and cognitively demanding mode switches to change the mapping of DoFs to control the robot. One option to decrease the difficulty of controlling a high-DoF assistive robot arm using a low-DoF input device is to assign different combinations of movement-DoFs to the device’s input DoFs depending on the current situation (adaptive control). To explore this method of control, we designed two adaptive control methods for a realistic virtual 3D environment. We evaluated our methods against a commonly used non-adaptive control method that requires the user to switch controls manually. This was conducted in a simulated remote study that used Virtual Reality and involved 39 non-disabled participants. Our results show that the number of mode switches necessary to complete a simple pick-and-place task decreases significantly when using an adaptive control type. In contrast, the task completion time and workload stay the same. A thematic analysis of qualitative feedback of our participants suggests that a longer period of training could further improve the performance of adaptive control methods.},

keywords = {},

pubstate = {published},

tppubtype = {article}

}

Max Pascher; Kirill Kronhardt; Til Franzen; Uwe Gruenefeld; Stefan Schneegass; Jens Gerken

My Caregiver the Cobot: Comparing Visualization Techniques to Effectively Communicate Cobot Perception to People with Physical Impairments Journal Article

In: Sensors, vol. 22, iss. 3, no. 755, 2022, ISSN: 1424-8220.

@article{Pascher2022,

title = {My Caregiver the Cobot: Comparing Visualization Techniques to Effectively Communicate Cobot Perception to People with Physical Impairments},

author = {Max Pascher and Kirill Kronhardt and Til Franzen and Uwe Gruenefeld and Stefan Schneegass and Jens Gerken},

doi = {10.3390/s22030755},

issn = {1424-8220},

year = {2022},

date = {2022-01-19},

urldate = {2022-01-19},

journal = {Sensors},

volume = {22},

number = {755},

issue = {3},

abstract = {Nowadays, robots are found in a growing number of areas where they collaborate closely with humans. Enabled by lightweight materials and safety sensors, these cobots increasingly gain popularity in domestic care, where they support people with physical impairments in their everyday lives. However, when cobots perform actions autonomously, it remains challenging for human collaborators to understand and predict their behavior, which is crucial for achieving trust and user acceptance. One significant aspect of predicting cobot behavior is understanding their perception and comprehending how they "see" the world. To tackle this challenge, we compared three different visualization techniques for Spatial Augmented Reality. All of these communicate cobot perception by visually indicating which objects in the cobot's surroundings have been identified by their sensors. We compared the well-established visualizations Wedge and Halo against our proposed visualization Line in a remote user study with participants suffering from physical impairments. In a second remote study, we validated these findings with a broader non-specific user base. Our findings show that Line, a lower complexity visualization, results in significantly faster reaction times compared to Halo, and lower task load compared to both Wedge and Halo. Overall, users prefer Line as a more straightforward visualization. In Spatial Augmented Reality, with its known disadvantage of limited projection area size, established off-screen visualizations are not effective in communicating cobot perception and Line presents an easy-to-understand alternative.},

keywords = {},

pubstate = {published},

tppubtype = {article}

}

Annalies Baumeister; Elizaveta Gardo; Patrizia Tolle; Barbara Klein; Max Pascher; Jens Gerken; Felix Goldau; Yashaswini Shivashankar; Udo Frese

The Importance of Participatory Design for the Development of Assistive Robotic Arms. Initial Approaches and Experiences in the Research Projects MobILe and DoF-Adaptiv Proceedings Article

In: Proceedings of Connected Living : international and interdisciplinary conference (2021), Frankfurt am Main, Germany, 2021.

@inproceedings{Baumeister2021,

title = {The Importance of Participatory Design for the Development of Assistive Robotic Arms. Initial Approaches and Experiences in the Research Projects MobILe and DoF-Adaptiv},

author = {Annalies Baumeister and Elizaveta Gardo and Patrizia Tolle and Barbara Klein and Max Pascher and Jens Gerken and Felix Goldau and Yashaswini Shivashankar and Udo Frese},

doi = {10.48718/8p7x-cw14},

year = {2021},

date = {2021-10-08},

urldate = {2021-10-08},

booktitle = {Proceedings of Connected Living : international and interdisciplinary conference (2021)},

address = {Frankfurt am Main, Germany},

abstract = {This article introduces two research projects towards assistive robotic arms for people with severe body impairments. Both projects aim to develop new control and interaction designs to promote accessibility and a better performance for people with functional losses in all four extremities, e.g. due to quadriplegia or multiple sclerosis. The project MobILe concentrates on using a robotic arm as drinking aid and controlling it with smart glasses, eye-tracking and augmented reality. A user-oriented development process with participatory methods was pursued, which brought new knowledge about the life and care situation of the future target group and the requirements a robotic drinking aid needs to meet. As a consequence, the new project DoF-Adaptiv follows an even more participatory approach, including the future target group, their family and professional caregivers from the beginning in decision making and development processes within the project. DoF-Adaptiv aims to simplify the control modalities of assistive robotic arms to enhance the usability of the robotic arm for activities of daily living. To decide on exemplary activities, like eating or opening a door, the future target group, their family and professional caregivers are included in the decision making process. Furthermore, all relevant stakeholders will be included in the investigation of ethical, legal and social implications as well as the identification of potential risks. This article will show the importance of the participatory design for the development and research process in MobILe and DoF-Adaptiv.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

Florian Borsum; Max Pascher; Jonas Auda; Stefan Schneegass; Gregor Lux; Jens Gerken

Stay on Course in VR: Comparing the Precision of Movement between Gamepad, Armswinger, and Treadmill Proceedings Article

In: Mensch und Computer 2021 - Tagungsband, Gesellschaft für Informatik e.V., Bonn, Germany, 2021, ISBN: 978-1-4503-8645-6/21/09.

@inproceedings{Borsum2021,

title = {Stay on Course in VR: Comparing the Precision of Movement between Gamepad, Armswinger, and Treadmill},

author = {Florian Borsum and Max Pascher and Jonas Auda and Stefan Schneegass and Gregor Lux and Jens Gerken},

doi = {10.1145/3473856.3473880},

isbn = {978-1-4503-8645-6/21/09},

year = {2021},

date = {2021-09-05},

urldate = {2021-09-05},

booktitle = {Mensch und Computer 2021 - Tagungsband},

publisher = {Gesellschaft für Informatik e.V.},

address = {Bonn, Germany},

abstract = {This paper investigates to what extent different locomotion techniques in virtual reality environments influence precision during interaction. Three techniques were examined in total: two of them integrate physical activity to produce a high degree of realism in the movement (Armswinger, treadmill). A gamepad was used as the third technique, serving as a baseline. In a study with 18 participants, the precision of these three locomotion techniques was examined across six different obstacles in a VR course. The results show that for individual obstacles that either require a combination of forward and sideways movement (slalom, cliff) or target speed (rail), the treadmill enables significantly more precise control than the Armswinger. Across the entire course, however, no input device is significantly more precise than another. Using the treadmill also takes significantly more time than the gamepad and the Armswinger. It also became apparent that the goal of reproducing a real walking motion 1:1 is still not achieved even with a treadmill, although the movement is nevertheless perceived as intuitive and immersive.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

Max Pascher; Annalies Baumeister; Stefan Schneegass; Barbara Klein; Jens Gerken

Recommendations for the Development of a Robotic Drinking and Eating Aid - An Ethnographic Study Proceedings Article

In: Carmelo Ardito, Rosa Lanzilotti, Alessio Malizia, Helen Petrie, Antonio Piccinno, Giuseppe Desolda, Kori Inkpen (Ed.): Human-Computer Interaction – INTERACT 2021, Springer, Cham, 2021, ISBN: 978-3-030-85623-6.

@inproceedings{Pascher2021,

title = {Recommendations for the Development of a Robotic Drinking and Eating Aid - An Ethnographic Study},

author = {Max Pascher and Annalies Baumeister and Stefan Schneegass and Barbara Klein and Jens Gerken},

editor = {Carmelo Ardito and Rosa Lanzilotti and Alessio Malizia and Helen Petrie and Antonio Piccinno and Giuseppe Desolda and Kori Inkpen},

doi = {10.1007/978-3-030-85623-6_21},

isbn = {978-3-030-85623-6},

year = {2021},

date = {2021-09-01},

urldate = {2021-09-01},

booktitle = {Human-Computer Interaction – INTERACT 2021},

publisher = {Springer, Cham},

abstract = {Being able to live independently and self-determined in one's own home is a crucial factor for human dignity and preservation of self-worth. For people with severe physical impairments who cannot use their limbs for everyday tasks, living in their own home is only possible with assistance from others. The inability to move arms and hands makes it hard to take care of oneself, e.g. drinking and eating independently. In this paper, we investigate how 15 participants with disabilities consume food and drinks. We report on interviews, participatory observations, and an analysis of the aids they currently use. Based on our findings, we derive a set of recommendations that supports researchers and practitioners in designing future robotic drinking and eating aids for people with disabilities.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

Stephanie Arévalo-Arboleda; Max Pascher; Annalies Baumeister; Barbara Klein; Jens Gerken

Reflecting upon Participatory Design in Human-Robot Collaboration for People with Motor Disabilities: Challenges and Lessons Learned from Three Multiyear Projects Proceedings Article

In: The 14th PErvasive Technologies Related to Assistive Environments Conference - PETRA 2021, ACM 2021, ISBN: 978-1-4503-8792-7/21/06.

@inproceedings{Arévalo-Arboleda2021,

title = {Reflecting upon Participatory Design in Human-Robot Collaboration for People with Motor Disabilities: Challenges and Lessons Learned from Three Multiyear Projects},

author = {Stephanie Arévalo-Arboleda and Max Pascher and Annalies Baumeister and Barbara Klein and Jens Gerken},

doi = {10.1145/3453892.3458044},

isbn = {978-1-4503-8792-7/21/06},

year = {2021},

date = {2021-06-29},

urldate = {2021-06-29},

booktitle = {The 14th PErvasive Technologies Related to Assistive Environments Conference - PETRA 2021},

organization = {ACM},

abstract = {Human-robot technology has the potential to positively impact the lives of people with motor disabilities. However, current efforts have mostly been oriented towards technology (sensors, devices, modalities, interaction techniques), thus relegating the user and their valuable input to the wayside. In this paper, we aim to present a holistic perspective of the role of participatory design in Human-Robot Collaboration (HRC) for People with Motor Disabilities (PWMD). We have been involved in several multiyear projects related to HRC for PWMD, where we encountered different challenges related to planning and participation, preferences of stakeholders, using certain participatory design techniques, technology exposure, as well as ethical, legal, and social implications. These challenges helped us provide five lessons learned that could serve as a guideline to researchers when using participatory design with vulnerable groups, in particular to young researchers who are starting to explore HRC research for people with disabilities.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}